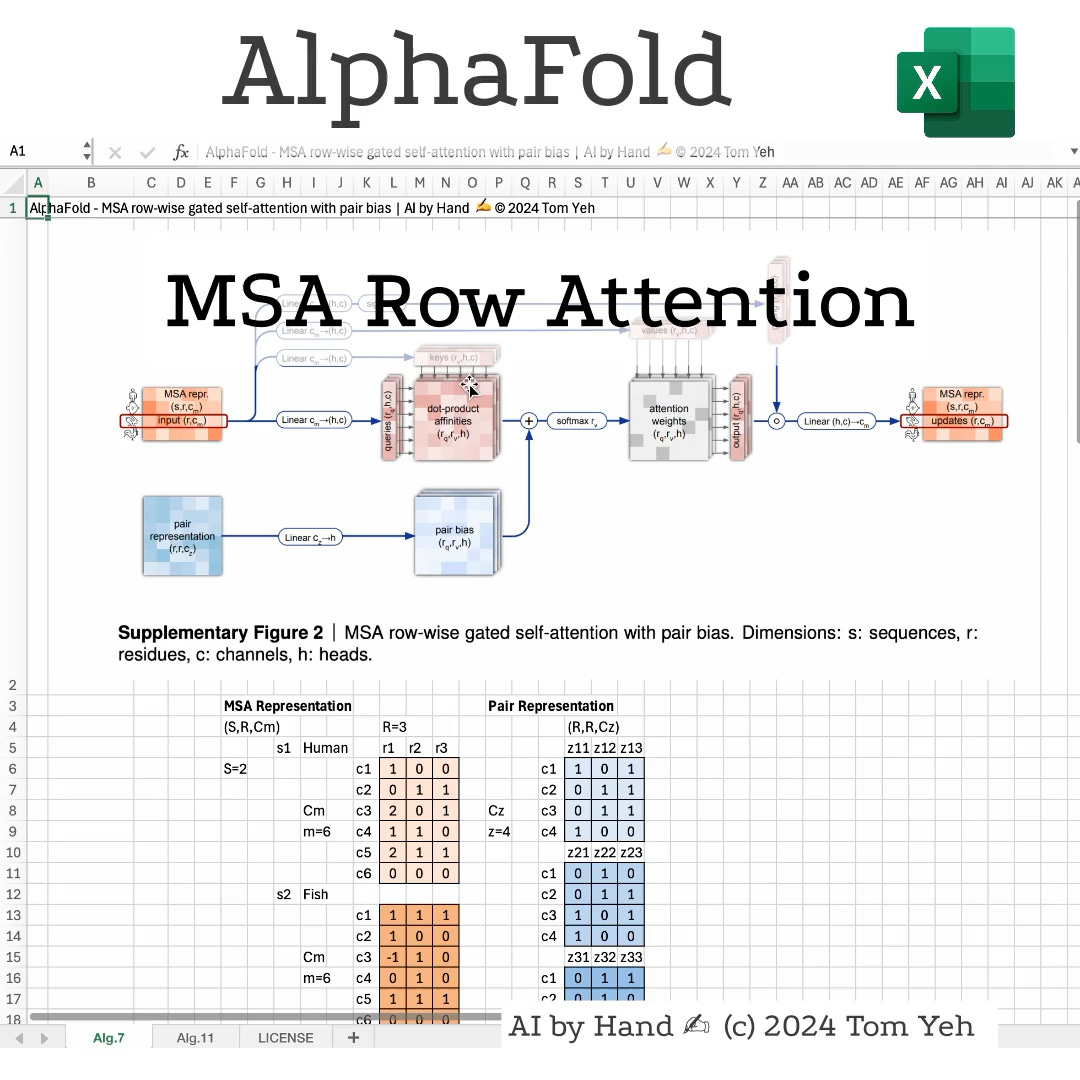

I designed this exercise to walk you through the calculations in two key blocks of the Evoformer described in the AlphaFold paper: MSA row-wise multi-head attention and the pair triangular update.
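For reference, here is a minimal NumPy sketch of the first block. It assumes the gated, pair-biased form of MSA row-wise attention used in AlphaFold. Each MSA row attends over its own residues, the pair representation adds a per-head bias to the attention logits, and a sigmoid gate scales each head's output. The function and parameter names, the toy sizes, and the random weights are hypothetical. LayerNorm's learnable scale and offset are left out.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    # Normalize over the channel (last) axis; learnable scale/offset omitted.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def msa_row_attention_with_pair_bias(m, z, p, n_head, c):
    """m: MSA representation (n_seq, n_res, c_m); z: pair representation (n_res, n_res, c_z)."""
    n_seq, n_res, _ = m.shape
    m = layer_norm(m)
    # Linear projections to per-head queries, keys, values: (n_seq, n_res, n_head, c)
    q = (m @ p["w_q"]).reshape(n_seq, n_res, n_head, c)
    k = (m @ p["w_k"]).reshape(n_seq, n_res, n_head, c)
    v = (m @ p["w_v"]).reshape(n_seq, n_res, n_head, c)
    # Pair bias: one scalar per head for each residue pair (i, j): (n_res, n_res, n_head)
    bias = layer_norm(z) @ p["w_b"]
    # Sigmoid gate for every sequence, residue, head, and channel
    g = sigmoid(m @ p["w_g"] + p["b_g"]).reshape(n_seq, n_res, n_head, c)
    # Scaled dot-product affinities within each MSA row s: (n_seq, n_head, n_res, n_res)
    logits = np.einsum("sihc,sjhc->shij", q, k) / np.sqrt(c)
    logits = logits + bias.transpose(2, 0, 1)[None]  # same pair bias for every row
    attn = softmax(logits, axis=-1)  # normalize over residues j
    o = g * np.einsum("shij,sjhc->sihc", attn, v)  # gated weighted sum of values
    # Concatenate heads and project back to c_m channels
    return o.reshape(n_seq, n_res, n_head * c) @ p["w_o"] + p["b_o"]


# Hypothetical toy sizes and random weights, for illustration only
rng = np.random.default_rng(0)
n_seq, n_res, c_m, c_z, n_head, c = 4, 6, 16, 8, 2, 4
p = {
    "w_q": rng.normal(size=(c_m, n_head * c)),
    "w_k": rng.normal(size=(c_m, n_head * c)),
    "w_v": rng.normal(size=(c_m, n_head * c)),
    "w_b": rng.normal(size=(c_z, n_head)),
    "w_g": rng.normal(size=(c_m, n_head * c)),
    "b_g": np.zeros(n_head * c),
    "w_o": rng.normal(size=(n_head * c, c_m)),
    "b_o": np.zeros(c_m),
}
m = rng.normal(size=(n_seq, n_res, c_m))
z = rng.normal(size=(n_res, n_res, c_z))
out = msa_row_attention_with_pair_bias(m, z, p, n_head, c)
print(out.shape)  # (4, 6, 16)
```

Attention runs independently within each row, so changing one MSA sequence changes only that sequence's output row. The pair bias is the route by which information from the pair representation enters this update.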

Background

For the first time I actually understand the Nobel Prize in Chemistry!

Back in high school, I didn't do very well in chemistry, so when I entered college, I avoided all chemistry courses. While many of my friends were struggling with the infamous "ochem," I was happily coding in C++, dealing with modules rather than molecules.

I decided it was time to study the AlphaFold paper by John Jumper, Richard Evans, Alexander Pritzel, et al., published in Nature in 2021. To my surprise, I was able to follow most of it: the paper is largely about deep learning architectures, models, and algorithms, which I know well. When I ran into chemistry or biology terms I didn't understand, such as residue, co-evolution, and protein folding, I asked ChatGPT, and it did a fine job explaining them.

Although the model involves a dozen other algorithms, this exercise focuses on two of the more complex ones. Once you understand these two, the simpler ones should be easier to follow.

Play I Spy!

Can you find these operations that you may have already studied in other deep learning architectures?

• MLP: linear projection

• Self-Attention: dot product affinity, Softmax

• LSTM: gating
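If you want to play along in code, here is a minimal NumPy sketch of the pair triangular update. It assumes the "outgoing edges" variant of AlphaFold's triangular multiplicative update. The "incoming" variant sums a[k, i] * b[k, j] instead. Both linear projections and sigmoid gates appear in it. The helper names (`linear`, `outgoing_product`, `triangle_update_outgoing`, `init`), the toy sizes, and the random weights are hypothetical. LayerNorm's learnable parameters are omitted.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    # Normalize over the channel (last) axis; learnable scale/offset omitted.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def linear(x, wb):
    # MLP-style linear projection over the channel axis
    w, b = wb
    return x @ w + b


def outgoing_product(a, b):
    # For every edge (i, j), sum over third residues k: a[i, k] * b[j, k]
    return np.einsum("ikc,jkc->ijc", a, b)


def triangle_update_outgoing(z, p):
    """z: pair representation (n_res, n_res, c_z). Returns an update of the same shape."""
    z = layer_norm(z)
    # Gated projections of the edges (LSTM-style sigmoid gating): (n_res, n_res, c)
    a = sigmoid(linear(z, p["a_gate"])) * linear(z, p["a_proj"])
    b = sigmoid(linear(z, p["b_gate"])) * linear(z, p["b_proj"])
    g = sigmoid(linear(z, p["out_gate"]))  # output gate: (n_res, n_res, c_z)
    return g * linear(layer_norm(outgoing_product(a, b)), p["out_proj"])


def init(rng, d_in, d_out):
    # Hypothetical random weights and zero biases, for illustration only
    return rng.normal(scale=0.1, size=(d_in, d_out)), np.zeros(d_out)


rng = np.random.default_rng(0)
n_res, c_z, c = 5, 8, 4
p = {
    "a_gate": init(rng, c_z, c), "a_proj": init(rng, c_z, c),
    "b_gate": init(rng, c_z, c), "b_proj": init(rng, c_z, c),
    "out_gate": init(rng, c_z, c_z), "out_proj": init(rng, c, c_z),
}
z = rng.normal(size=(n_res, n_res, c_z))
print(triangle_update_outgoing(z, p).shape)  # (5, 5, 8)
```

The key idea is in `outgoing_product`. Edge (i, j) is updated using the two other edges of every triangle (i, j, k), which lets the pair representation enforce consistency between residue pairs.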

I hope this exercise gives you new insight into the impact of AI and deep learning on fields outside computer science!