U-Net, Transformer, Activation, Backpropagation, MLP

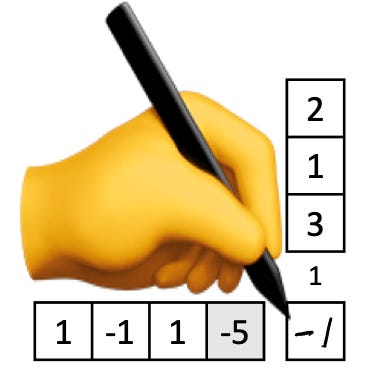

1. U-Net

U-Net: Can you calculate this by hand? ✍

by Tom Yeh

If you use DALL-E, Midjourney, or Stable Diffusion to generate images, you should know that under the hood is a U-Net, plus various add-ons such as attention, skip connections, diffusion, and conditioning.

In this exercise, I use 1x1 convolution layers to simplify hand calculation, without loss of generality.
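To see why 1x1 convolutions keep the arithmetic manageable, here is a minimal NumPy sketch. It is not taken from the exercise itself: the function name `conv1x1` and all the numbers are made up for illustration. A 1x1 convolution mixes channels at each pixel independently, so each output pixel is just a small matrix-vector product.

```python
import numpy as np

def conv1x1(x, w, b):
    """Apply a 1x1 convolution.

    x: input feature map, shape (C_in, H, W)
    w: weights, shape (C_out, C_in)
    b: biases, shape (C_out,)
    Returns an array of shape (C_out, H, W).
    """
    # Every pixel is transformed by the same (C_out x C_in) matrix.
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]

# Illustrative values only (hypothetical, not from the workbook).
x = np.array([[[1.0, 2.0], [3.0, 4.0]],   # channel 0
              [[0.0, 1.0], [1.0, 0.0]]])  # channel 1
w = np.array([[1.0, -1.0],
              [0.5,  2.0],
              [0.0,  1.0]])
b = np.array([0.0, 1.0, -1.0])

y = conv1x1(x, w, b)
print(y.shape)     # (3, 2, 2)
print(y[:, 0, 1])  # same as w @ x[:, 0, 1] + b
```

Because no pixel depends on its neighbours, each output value can be checked by hand with one dot product and one addition.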

Download the document:

Read the original LinkedIn post:

https://www.linkedin.com/feed/update/urn:li:ugcPost:7174055355784515584/

2. Transformer

Deep Dive into Transformers by Hand ✍ - Explore the details behind the power of transformers

by Srijanie Dey

There has been a new development in our neighborhood.

A ‘Robo-Truck,’ as my son likes to call it, has made its new home on our street.

It is a Tesla Cybertruck, and I have tried to explain that name to my son many times, but he insists on calling it Robo-Truck. Now every time I look at Robo-Truck and hear that name, it reminds me of the movie Transformers, where robots could transform to and from cars.

And isn’t it strange that Transformers as we know them today could very well be on their way to powering these Robo-Trucks? It’s almost a full-circle moment. But where am I going with all this?

Well, I am heading to the destination — Transformers. Not the robot car ones but the neural network ones. And you are invited!

Read the full article: https://towardsdatascience.com/deep-dive-into-transformers-by-hand-%EF%B8%8E-68b8be4bd813

3. Activation

Activation: AI by Hand ✍ Workbook - 25 Exercises 🏋️

by Tom Yeh

This workbook consists of 25 exercises. Every exercise features one or two "missing" values, highlighted in a soft shade of blue or red.

Can you calculate these missing values by hand? ✍

Download the workbook:

4. Backpropagation

Backpropagation and Gradient Descent: The Backbone of Neural Network Training

👋 Hi there! In this seventh installment of my Deep Learning Fundamentals Series, let's explore and finally understand backpropagation and gradient descent. These two concepts are like the dynamic duo that makes neural networks learn and improve, kind of like a brain gaining superpowers! What makes them so mysterious… and how do they work together to make neural networks so powerful?

Read the full blog article and try the interactive tutorial:

https://jeremylondon.com/blog/deep-learning-basics-deciphering-backpropagation/

5. Multi Layer Perceptron (MLP)

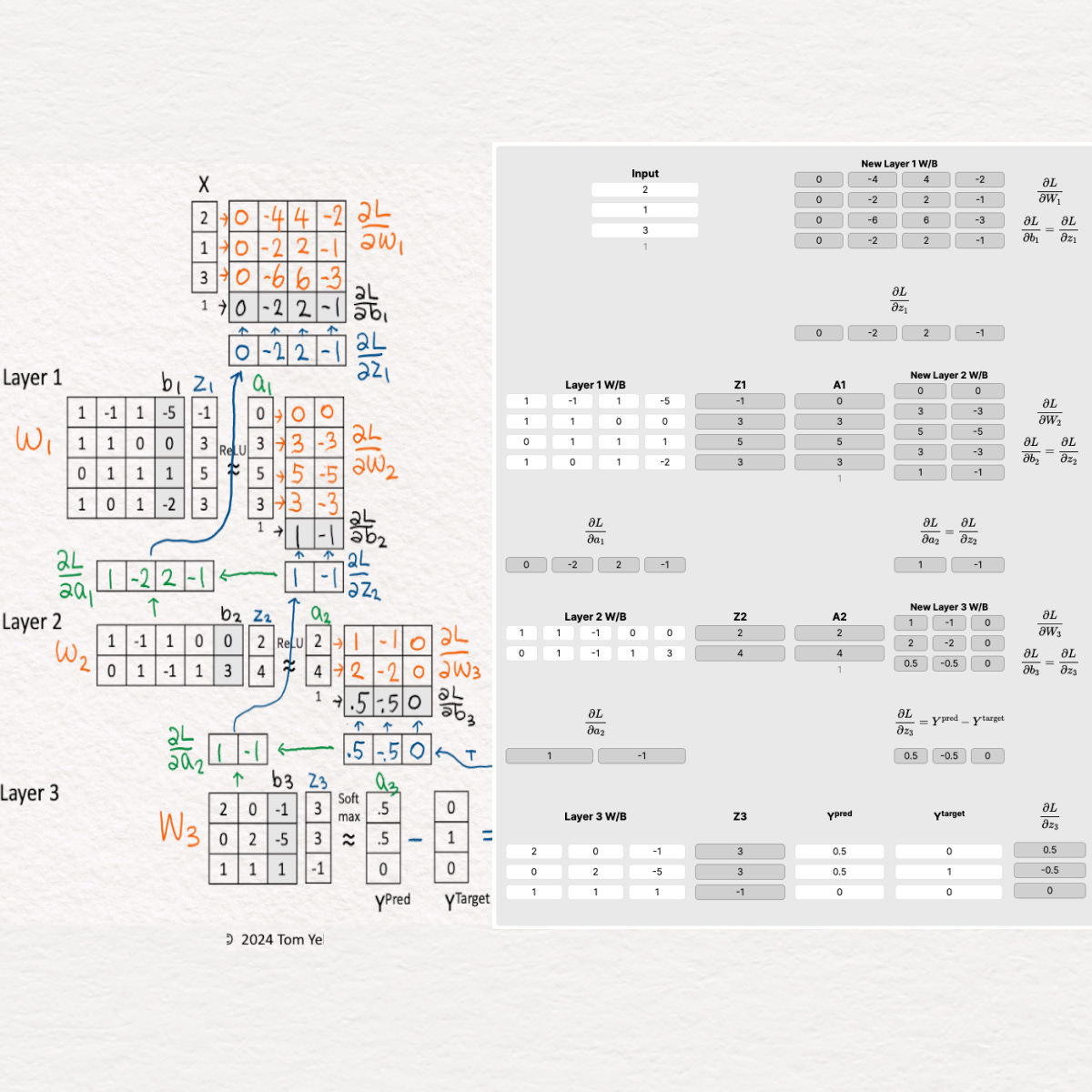

Multi Layer Perceptron (MLP) - AI by Hand ✍ with Anna (3:35)

by Anna Rahn

This is the fifth walkthrough video in our "AI by Hand" series about explainable deep learning based on the materials developed by Professor Tom Yeh. We take all the building blocks we covered earlier - such as hidden layers and batches - and piece them together to work through more complex architectures such as seven-layer perceptrons.
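As a rough illustration of how hidden layers and batches fit together, here is a minimal NumPy sketch of an MLP forward pass. It is not taken from the video: the helper `mlp_forward`, the choice of ReLU on every layer, the column-per-example batch layout, and all the numbers are assumptions made for this example.

```python
import numpy as np

def relu(z):
    # Replace negative values with zero.
    return np.maximum(z, 0.0)

def mlp_forward(x, layers):
    """Run a batch through a stack of fully connected layers.

    x: inputs, shape (features, batch); each column is one example.
    layers: list of (W, b) pairs, with W of shape (out, in) and b of shape (out,).
    ReLU is applied after every layer, including the last (an assumption).
    """
    a = x
    for W, b in layers:
        a = relu(W @ a + b[:, None])
    return a

# Hypothetical two-layer network and a batch of two examples.
x = np.array([[1.0,  2.0],
              [0.0, -1.0]])
layers = [
    (np.array([[1.0,  1.0],
               [1.0, -1.0],
               [0.0,  2.0]]), np.array([0.0, -1.0, 1.0])),
    (np.array([[1.0, 0.0, -1.0],
               [0.0, 1.0,  1.0]]), np.array([0.0, 0.0])),
]

out = mlp_forward(x, layers)
print(out)  # one column of outputs per example in the batch
```

A seven-layer perceptron is the same loop with seven `(W, b)` pairs in `layers`; each step is still a matrix multiplication, a bias addition, and an activation.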

Watch the YouTube video:

If you liked this newsletter, please forward it to your friends and colleagues.